Making

754 posts

Page

of 38

Sunday, January 11th, 2026 at 2:00 PM

Winamp skin shuffler

Returning to the Winamp skin shuffler connected to Spotify.

Tuesday, January 6th, 2026 at 12:10 PM

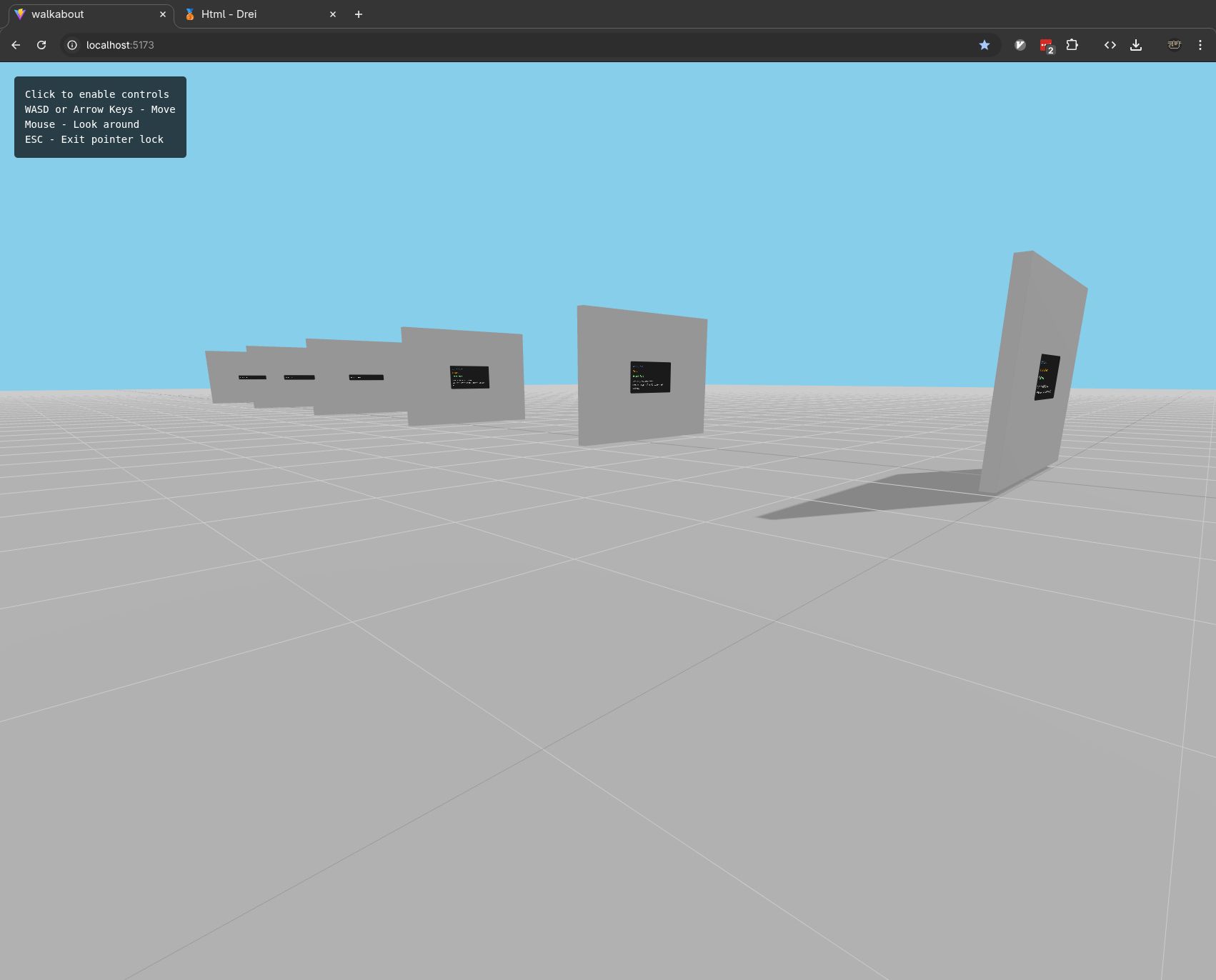

Neovim as window manager

Neovim as window manager preview

I recorded a video of my current Neovim + Niri setup: https://www.youtube.com/watch?v=pCbwL1iRWXk

Sunday, January 4th, 2026 at 6:42 PM

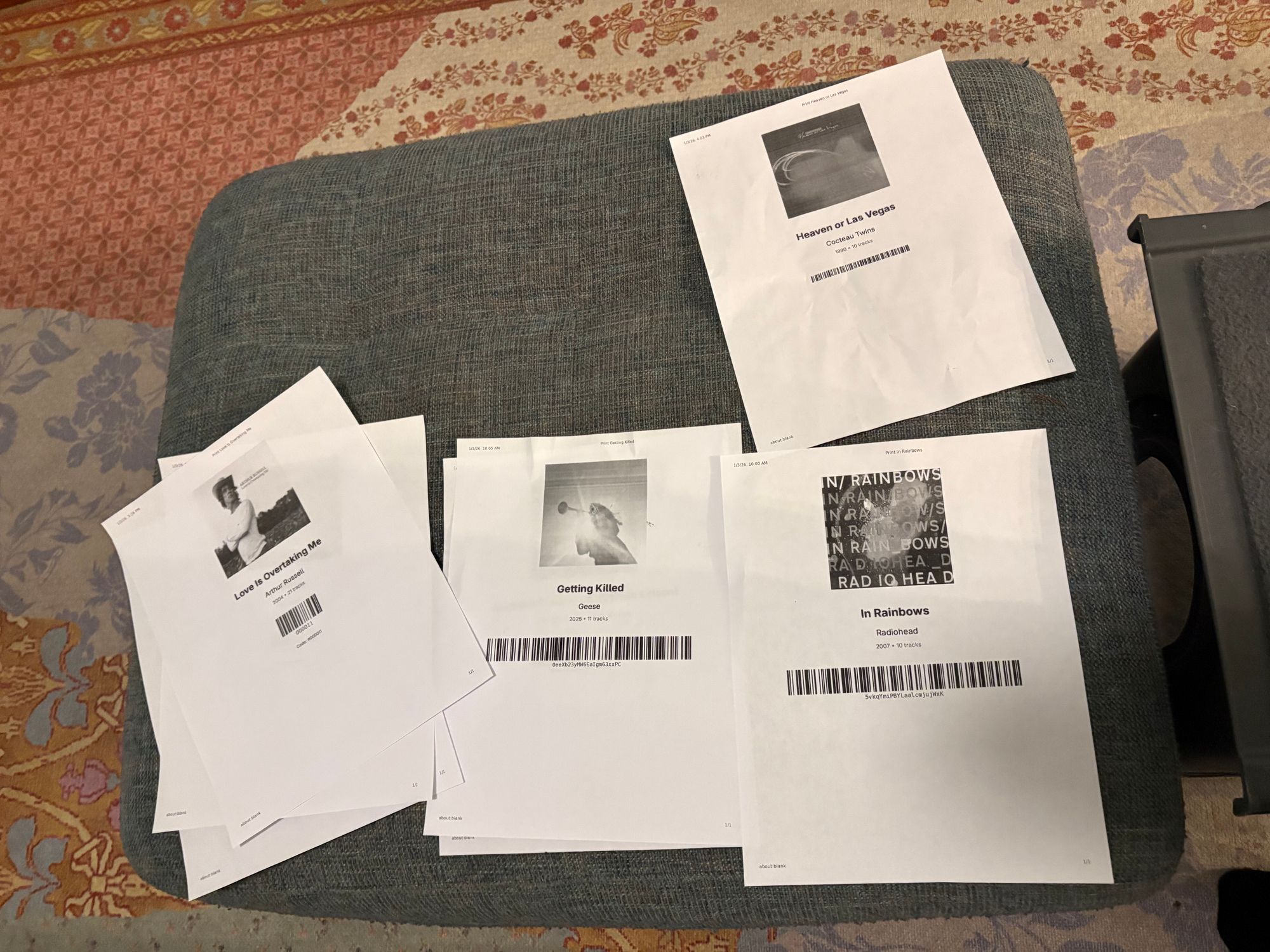

Music boxes

Testing out printing little foldable music boxes with the barcodes. It's fun trying to decide on the dimensions - I'd really like a spine.

Also not sure what I want to do for structure. I put a piece of cardboard in one of these - I bet I could cut up some scrap 1/2" plywood to use as blanks...

Saturday, January 3rd, 2026 at 5:36 PM

Barcode experiments

Testing out a barcode scanner as a way to play albums (through Spotify in this case). Wondering if this is a way to bridge the tangibility of physical media and the flexibility of digital.

Rough working player scanner. TODO: I accidentally mirror-flipped this video

Spotify IDs are kind of long to encode directly, so I switched to sequential IDs and a lookup table

Thursday, December 11th, 2025 at 12:31 PM

A week of prototyping at the Creative Lab

Four recent experiments:

1. Webcam to avatar on canvas

Wednesday, December 3rd, 2025 at 10:16 AM

Finger painting

More paint tests with the projector and touch frame.

Monday, December 1st, 2025 at 1:34 PM

Touch painting

Painting with an IR touch frame, projector, and http://image-paint.constraint.systems

paintbrush

ball

Sunday, November 30th, 2025 at 1:57 PM

More IR frame experiments

I got the projector sized to the IR touch frame. I made a desk inset for it and a wood overlay to sit on top.

The overlay makes that section non-interactive (it had to be an overlay because the IR beams need to get from one side to the other).

Tuesday, November 25th, 2025 at 5:02 PM

IR Screen

I ordered this IR touch screen frame not knowing what to expect, and so far I'm pleasantly surprised. I still need to map the projector to it, but touch detection seems pretty good.
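The post doesn't describe how the mapping is done. As a hedged sketch: if the frame reports absolute touch positions in its own raw units and the projected image is roughly axis-aligned with it, two calibration touches on opposite corners are enough to fit a scale and offset per axis. This ignores rotation and keystone distortion, which would need a four-point perspective (homography) fit instead. The function names and the raw readings below are hypothetical.

```python
from collections.abc import Callable
from dataclasses import dataclass

Point = tuple[float, float]


@dataclass(frozen=True)
class AxisMap:
    """Linear map from raw touch units to canvas pixels along one axis."""

    scale: float
    offset: float

    def apply(self, raw: float) -> float:
        return raw * self.scale + self.offset


def fit_axis(raw_a: float, raw_b: float, px_a: float, px_b: float) -> AxisMap:
    """Solve px = raw * scale + offset from two reference touches on one axis."""
    if raw_a == raw_b:
        raise ValueError("reference touches must differ along this axis")
    scale = (px_b - px_a) / (raw_b - raw_a)
    return AxisMap(scale=scale, offset=px_a - raw_a * scale)


def calibrate(top_left_raw: Point, bottom_right_raw: Point,
              width: float, height: float) -> Callable[[float, float], Point]:
    """Build a raw-touch -> canvas-pixel function from touches on two projected corners."""
    x_map = fit_axis(top_left_raw[0], bottom_right_raw[0], 0.0, width)
    y_map = fit_axis(top_left_raw[1], bottom_right_raw[1], 0.0, height)

    def to_canvas(raw_x: float, raw_y: float) -> Point:
        return x_map.apply(raw_x), y_map.apply(raw_y)

    return to_canvas


# Hypothetical readings: the raw values reported when touching the top-left
# and bottom-right corners of a 1920x1080 projected image.
to_canvas = calibrate((1000, 2000), (31000, 30000), 1920, 1080)
print(to_canvas(16000, 16000))
```

Because the scale can come out negative, the same fit also handles a frame whose axis runs opposite to the projector's.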

Monday, November 17th, 2025 at 3:08 PM

Cosine

New on Constraint Systems:

Cosine - embed and hash any text and see its nearest neighbors

https://cosine.constraint.systems
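The post doesn't say how the app ranks neighbors; the name suggests cosine similarity between embedding vectors. A minimal sketch of that ranking, assuming the embeddings have already been produced by some model (the toy vectors and the names `cosine_similarity` and `nearest_neighbors` are hypothetical, and the hashing step isn't illustrated):

```python
import math
from collections.abc import Mapping, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between a and b: dot(a, b) / (|a| * |b|)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def nearest_neighbors(
    query: Sequence[float],
    corpus: Mapping[str, Sequence[float]],
    k: int = 3,
) -> list[tuple[str, float]]:
    """Return the k (text, similarity) pairs in corpus closest to query, best first."""
    scored = [(text, cosine_similarity(query, vec)) for text, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings"; a real model would produce hundreds of dimensions.
corpus = {
    "cats": [0.9, 0.1, 0.0],
    "kittens": [0.85, 0.2, 0.05],
    "spreadsheets": [0.0, 0.2, 0.95],
}
print(nearest_neighbors([0.88, 0.15, 0.02], corpus, k=2))
```

Cosine similarity compares direction rather than length, so two texts with similar meaning score close to 1 regardless of vector magnitude. A brute-force scan like this is fine for a small corpus; larger ones usually call for an approximate nearest-neighbor index.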

Wednesday, November 12th, 2025 at 7:54 PM

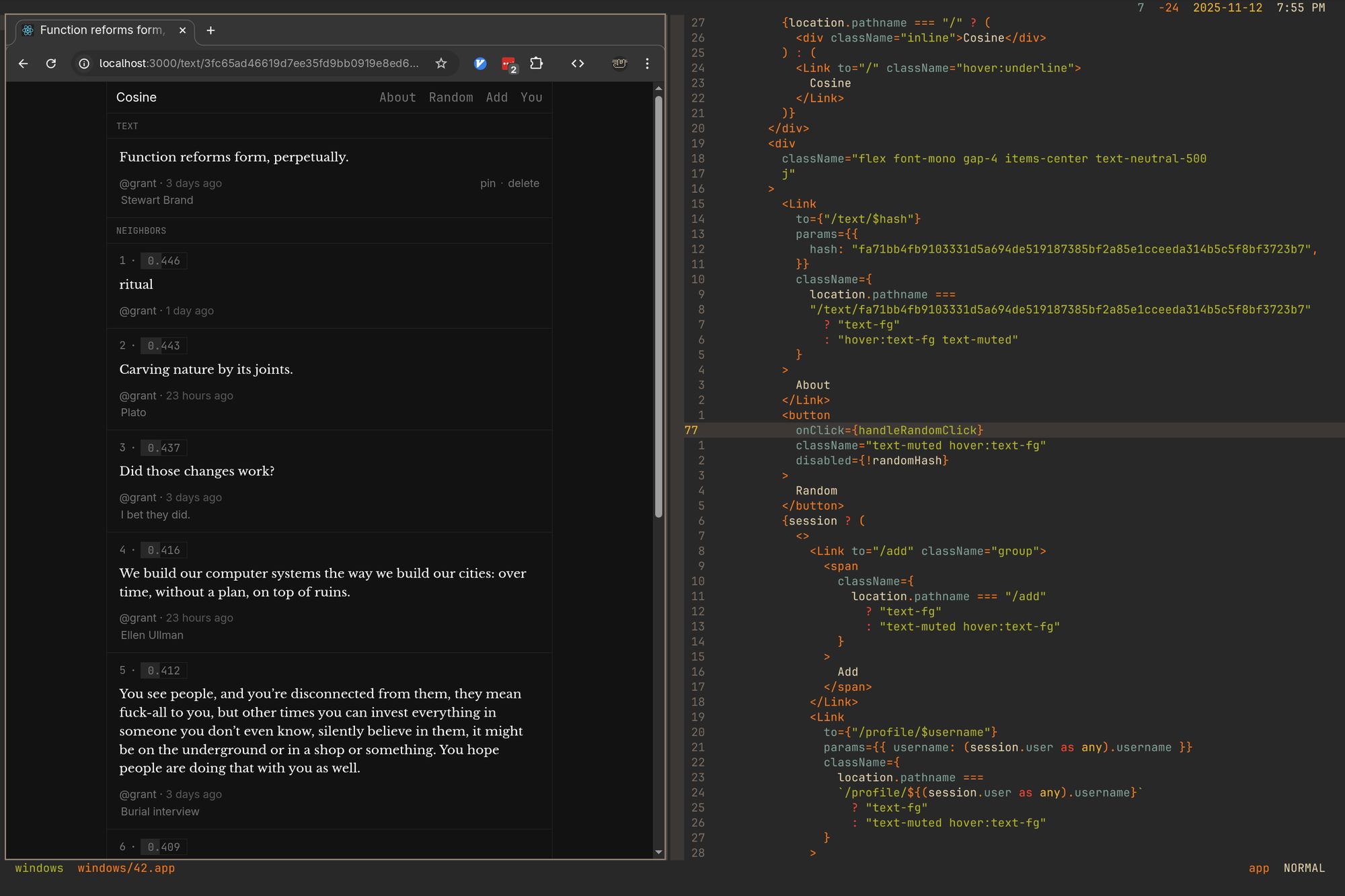

Embeddings WIP

Getting pretty close with the embeddings app.

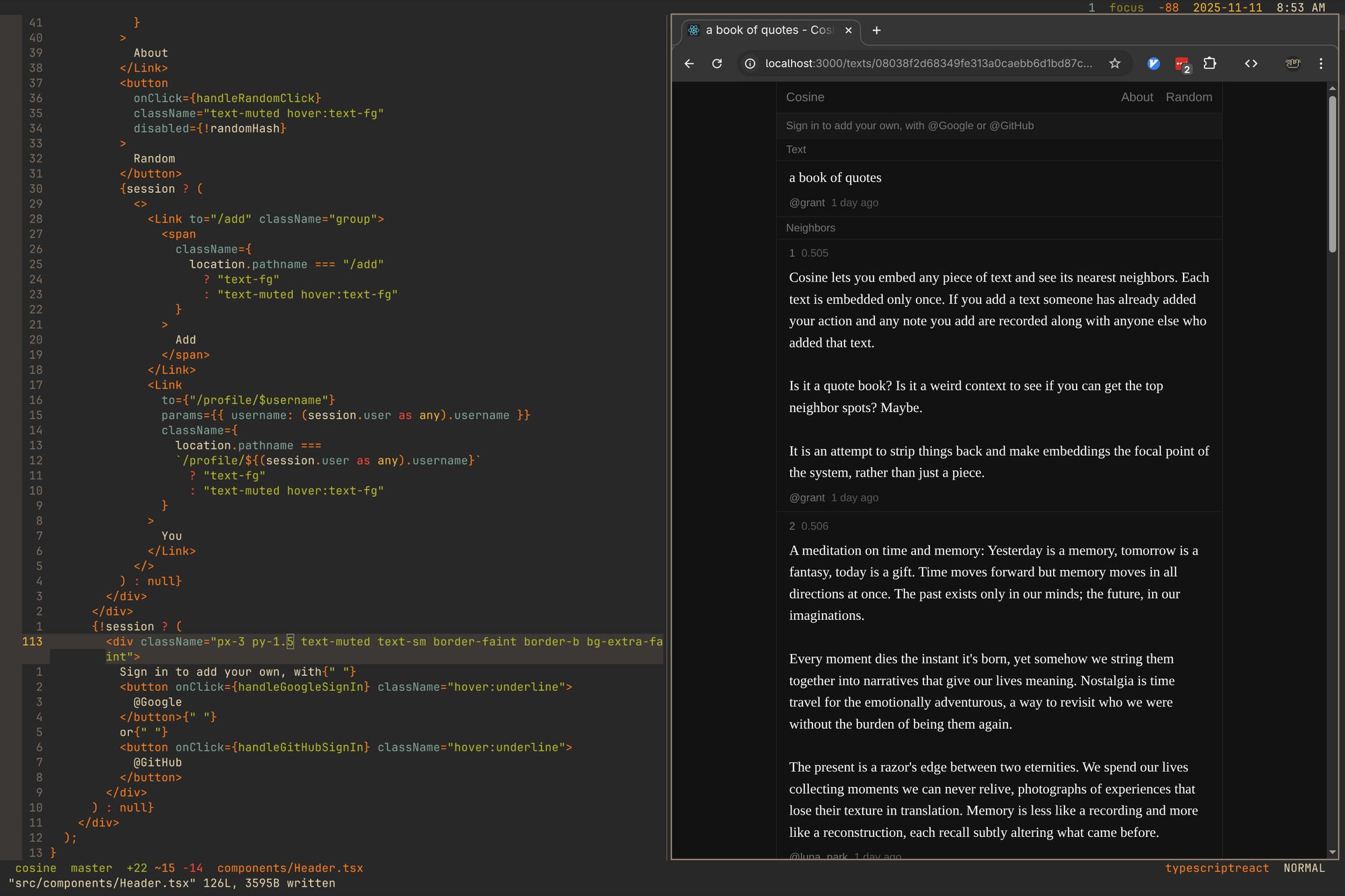

Tuesday, November 11th, 2025 at 8:53 AM

Exploring an "everything is embedded" mini/fake social network.

Thursday, November 6th, 2025 at 8:16 PM

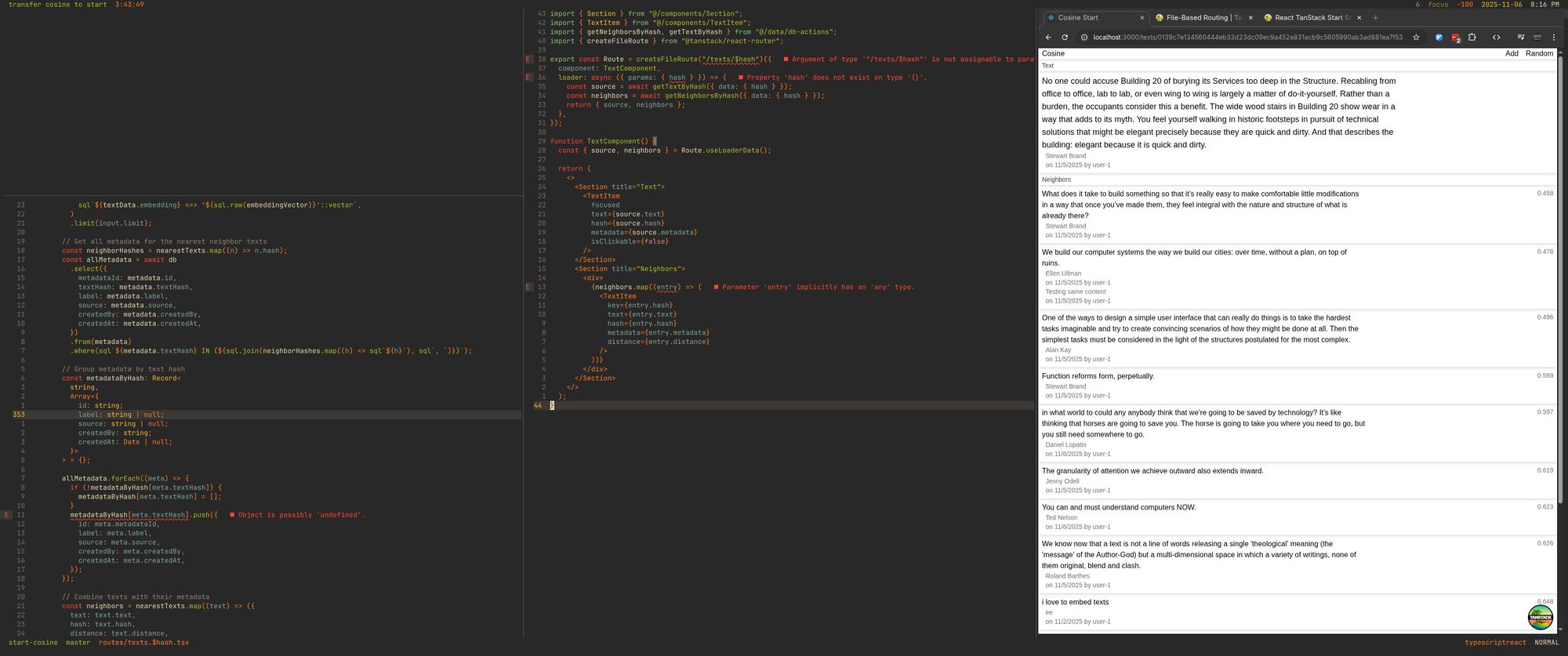

Cosine WIP

Working on a stripped down "embed anything and see its neighbors" prototype.

Need to sort out some type errors though

Thursday, November 6th, 2025 at 2:37 PM

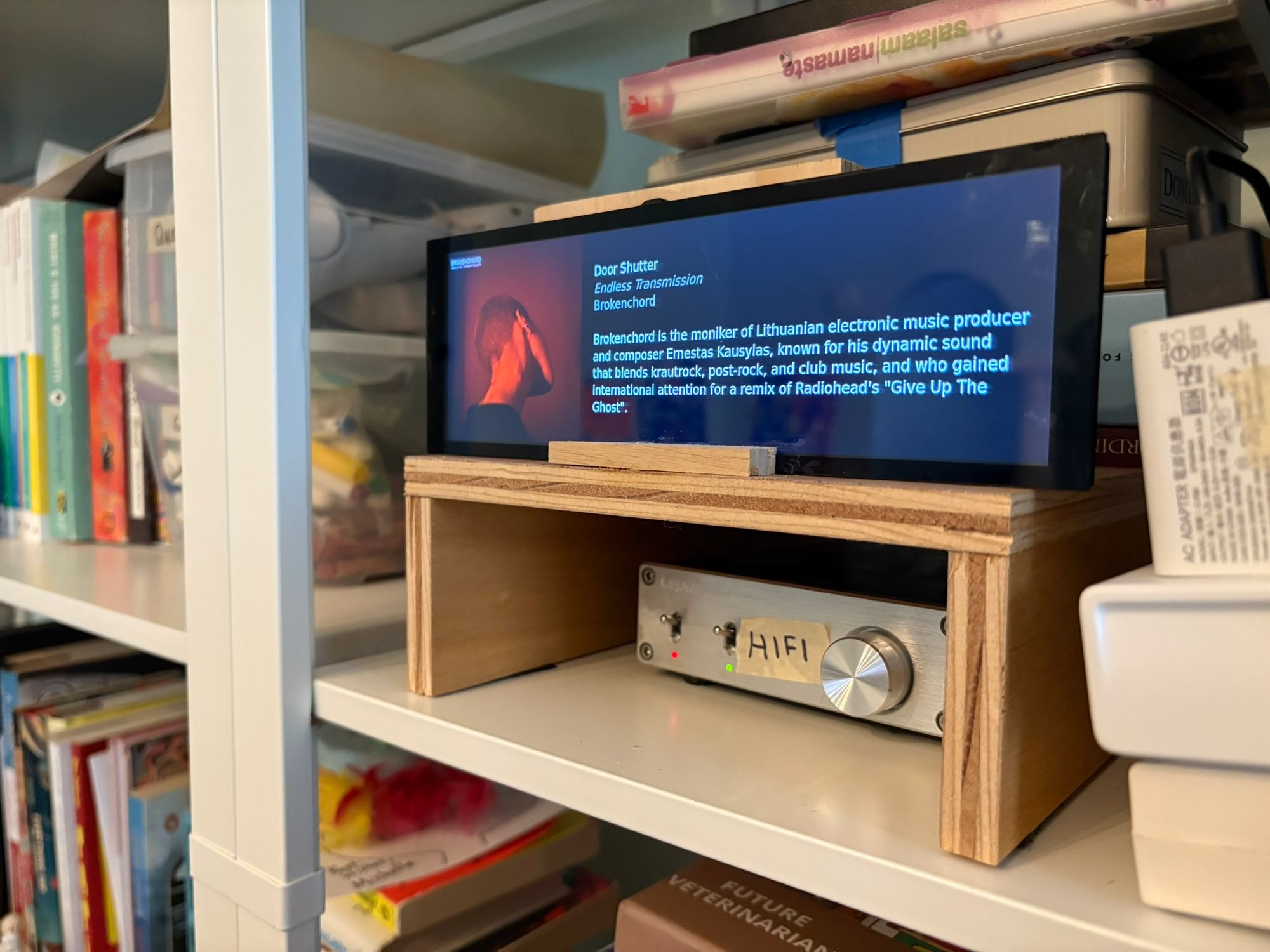

Booting a Raspberry Pi 3B to a browser

Notes from putting together the artist info display. This is with Raspberry Pi OS Lite (no desktop). I ended up using 32-bit, but maybe could have used 64-bit.

The setup lives in .xinitrc, which runs automatically when the X session starts. Some of it is specific to this monitor.

Thursday, November 6th, 2025 at 10:45 AM

Artist info display

Album art and artist info display

I've been listening to a lot of playlists lately and wanted a way to learn more about the currently playing artist without pulling out my phone. I put together a little Raspberry Pi setup that sits on our shelves and displays info about whoever is playing.

I think this would be a cool fit for coffee shops or communal workspaces. Let me know if you're interested in setting up something similar, and I'll try to help.

Thursday, October 30th, 2025 at 4:10 PM

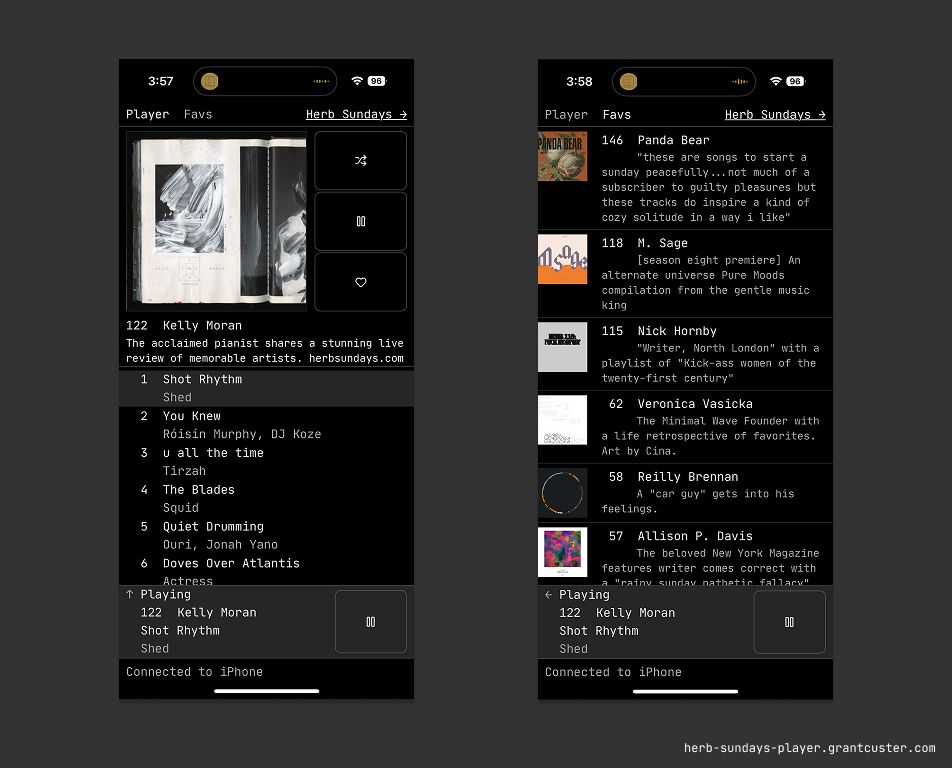

Herb Sundays Player

I made a web player for the Herb Sundays playlist series.

It's focused on surfacing a random playlist from the series. I've been using it to put something on in the morning. It's nice to have hand-curated playlists with some intention to them.
